Canon has filed a patent for slow shutter bracketing. The patent details are below, and the diagrams from the patent are above. I have also included an AI explanation of the patent after the details.

Overview

- [Publication number] P2025093064

- [Release date] 2025-06-23

- [Title of Invention] Imaging device and its control method and program

- [Application date] 2023-12-11

- [Applicant][Identification number] 000001007[Name or company name] Canon Inc.

- An imaging device capable of obtaining a plurality of images with different slow shutter expressions in response to a single shooting instruction, and a control method and program for the same are provided.

- [Background Art][0002]As a photography technique using long exposure, there is slow shutter photography, which captures the movement of a moving subject such as a waterfall or water flowing in a river. In slow shutter photography, the expression and impression of the photograph that can be taken changes depending on the length of the exposure time, so it is necessary to repeatedly shoot until a photo that is satisfactory to the user is captured. However, when repeatedly shooting while changing the exposure time, it is not possible to record images with different slow shutter expressions for the same scene.

- [0003]On the other hand, there are conventional techniques that realize a pseudo long exposure. For example, Patent Document 1 discloses a method of synthesizing images taken repeatedly at specific intervals to achieve a specific brightness. Patent Document 2 discloses a method of recording images of the same scene with different exposures as bracket images by repeatedly aligning and additively synthesizing multiple captured images and storing the results.

- [0005]However, in the prior art of Patent Document 1, the brightness of the image changes, so images with different slow shutter expressions cannot be recorded. Also, in the prior art of Patent Document 2, the positioning is performed between the captured images, so images with slow shutter expressions that capture the movement of a moving subject cannot be recorded.

- [Patent Document 1] JP 2022-125117 A (OM Digital)

- [0006]The present invention has been made in consideration of the above-mentioned problems, and aims to provide an imaging device and a control method and program thereof that can obtain multiple images with different slow shutter expressions with a single shooting instruction.

- [Effects of the Present Embodiments][0008]According to the present invention, a plurality of images with different slow shutter expressions can be obtained with a single shooting instruction.

AI Explanation of the Patent

Summary

Think of P2025093064 as Canon’s recipe for turning your camera into its own ND filter and tripod. By slicing a long exposure into many short, easily stabilised frames and smart-merging them with AI, it promises shake-free blur effects straight out of camera. If it makes it into a future EOS R-series body, landscape and city-scape shooters could leave the heavy glass filters—and even their tripods—at home.

What problem is Canon trying to solve?

- Long-exposure photography (think silky waterfalls or traffic-light trails) usually needs a neutral-density (ND) filter or very low light.

- ND filters are fiddly, easy to lose, and can introduce colour-cast or vignetting.

- Hand-holding multi-second exposures is impossible without blur.

- The patent’s goal is to let you capture the long-exposure look—hand-held and filter-free—by having the camera “fake” one long exposure from a burst of short ones.

Core idea in plain English

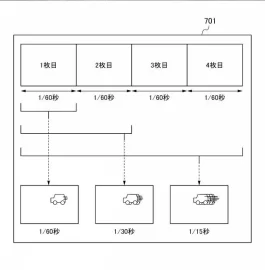

- Scene analysis. The camera quickly meters the scene and decides an overall “target shutter time” you want to simulate (for example, 2 s, 4 s, 8 s).

- Slow-shutter bracketing burst. Instead of opening the shutter for the whole 8 s, it fires a rapid burst of short frames, say 240 frames at 1/30 s each, whose exposure times add up to the 8 s target.

- AI alignment & merge.

  - Built-in stabilisation data and deep-learning models line up the frames down to the pixel, even if you’re hand-holding.

  - Moving elements (water, clouds, traffic) are blended to look stretched and streaky.

  - Stationary parts are stacked to stay tack-sharp.

- Automatic exposure guard-rails. The camera keeps each slice short enough that highlight clipping doesn’t happen, so you still get detail in bright areas.

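- Finished JPEG/RAW output. The processor outputs files that look as if you’d used a real ND filter and tripod; per the patent abstract, a single shooting instruction can yield several images with different slow shutter expressions.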
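The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Canon's method: frames are flat lists of pixel values that are already aligned, the merge is a plain per-pixel mean, and the function names (`frames_needed`, `mean_stack`, `slow_shutter_bracket`) are made up for this example. It shows how one burst could yield several images, each simulating a different shutter time, by merging a different number of slices.

```python
from fractions import Fraction

def frames_needed(target_s, slice_s):
    """Number of short slices whose exposure times add up to the target."""
    n = Fraction(target_s) / Fraction(slice_s)
    # Ceiling division, so the summed exposure is never shorter than the target.
    return -(-n.numerator // n.denominator)

def mean_stack(frames):
    """Average equally sized frames pixel by pixel (assumes they are aligned)."""
    count = len(frames)
    return [sum(px) / count for px in zip(*frames)]

def slow_shutter_bracket(frames, slice_s, targets_s):
    """Build one merged image per simulated shutter time from a single burst."""
    merged = {}
    for target in targets_s:
        # Use as many slices as the target needs, capped by the burst length.
        k = min(frames_needed(target, slice_s), len(frames))
        merged[target] = mean_stack(frames[:k])
    return merged

# Toy scene: a bright dot moving one pixel per frame along an 8-pixel row.
burst = [[1.0 if x == i else 0.0 for x in range(8)] for i in range(8)]
bracket = slow_shutter_bracket(burst, Fraction(1, 4), [1, 2])
```

In the toy scene, the 1 s result smears the dot over four pixels and the 2 s result smears it over all eight, which is the "different slow shutter expressions" idea in miniature.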

What hardware & software does it rely on?

|

Building block |

Why it matters |

Patent twist |

|---|---|---|

|

Fast read-out sensor |

Limits rolling-shutter skew between frames |

Sensor readout speed is factored into how many slices are used. |

|

In-body IS (IBIS) + lens IS |

Records motion data for frame alignment |

Gyro info feeds the AI merge stage. |

|

High-speed processor / on-chip AI cores |

Runs the de-shake + blending in real time |

Model adapts the number of frames if you pan intentionally. |

|

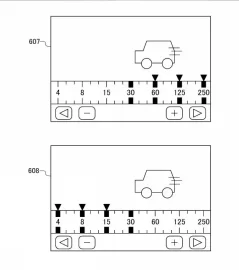

Firmware “Live ND” UI |

Lets you pick ND-equivalent strengths (ND2, ND4, ND8, ND16…) |

Auto mode can pick strength for you. |
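For readers unfamiliar with ND numbers, here is a small worked example of what those strengths mean. The filter arithmetic is standard (an NDk filter passes 1/k of the light, which is log2(k) stops). The mapping to a slice count is an assumption for illustration only: it supposes each slice is shot at the unfiltered base shutter time, so k slices cover the same total time an NDk filter would allow. The function names are hypothetical.

```python
import math
from fractions import Fraction

def nd_stops(nd_factor):
    """Light reduction in stops: an NDk filter passes 1/k of the light."""
    return math.log2(nd_factor)

def simulated_shutter(base_shutter_s, nd_factor):
    """Shutter time an NDk filter would allow at the same brightness."""
    return Fraction(base_shutter_s) * nd_factor

def slices_for_nd(nd_factor):
    """Assumed mapping: one slice per unit of ND factor at the base shutter."""
    return int(nd_factor)

base = Fraction(1, 30)  # unfiltered shutter time in seconds (example value)
plan = {
    nd: (nd_stops(nd), simulated_shutter(base, nd), slices_for_nd(nd))
    for nd in (2, 4, 8, 16)
}
```

With a 1/30 s base shutter, ND8 works out to 3 stops and a simulated 4/15 s exposure built from 8 slices under this assumption.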

Why bracketing instead of a straight electronic ND filter?

- A straight electronic ND only darkens the exposure; the shot is still one long exposure, so you still need a tripod.

- Here each slice is short → sharp → easy to align; but when they’re merged the sum of exposures mimics a single long one.

- Because exposure is chopped up, each slice can stay below clipping, so highlights keep detail that a single unfiltered 8-second shot might blow out.
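A toy calculation makes the highlight argument concrete. It is a sketch under simple assumptions, not the patent's exposure logic: pixel signal grows linearly with exposure time, saturates at a normalised level of 1.0, and the merge is a plain mean of the slices. The radiance values and function names are invented for the example.

```python
FULL_SCALE = 1.0  # normalised saturation level of a pixel (assumed)

def expose(radiance_per_s, shutter_s):
    """Signal recorded by one exposure, clipped at sensor saturation."""
    return min(FULL_SCALE, radiance_per_s * shutter_s)

def sliced_exposure(radiance_per_s, slice_s, n_slices):
    """Mean of n short exposures; each slice clips independently."""
    total = sum(expose(radiance_per_s, slice_s) for _ in range(n_slices))
    return total / n_slices

highlight, midtone = 12.0, 3.0  # made-up scene radiances per second

# One unfiltered 8 s exposure: both pixels saturate, so they look identical.
single = [expose(highlight, 8.0), expose(midtone, 8.0)]

# 240 slices of 1/30 s: neither pixel clips, so their 4:1 ratio survives.
sliced = [
    sliced_exposure(highlight, 1 / 30, 240),
    sliced_exposure(midtone, 1 / 30, 240),
]
```

The comparison is against a long exposure without a filter. A real ND filter would also avoid clipping, but it would not remove the need for a tripod.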

Practical pay-offs

- Hand-held long exposures. Rivers, fountains, or street scenes without lugging a tripod.

- Cleaner high-sunlight shots. No colour shift or loss of corner sharpness that physical ND glass can introduce.

- Faster workflow. No filter screwing, no post-stacking; the camera spits out the finished file.

- Creative flexibility. The patent mentions letting you dial in virtual ND strengths or letting the camera suggest one based on scene motion.

Hidden challenges the patent tackles

| Challenge | Patent’s counter-measure |
|---|---|
| Ghosting from people/cars that move unpredictably | AI detects “outlier” objects and either masks or fades them to avoid double images. |
| Overheating from rapid bursts | Firmware limits the burst length if sensor temperature rises. |
| Excess memory use | Only intermediate frames essential for the final blend are cached; the rest are discarded on-the-fly. |
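On the memory point, one common way to avoid keeping a whole burst around is a running mean, where each frame is folded into a single accumulator and then dropped. This is a generic technique swapped in for illustration; the text above does not say which caching scheme the patent actually uses, and the `RunningMean` class is hypothetical.

```python
class RunningMean:
    """Per-pixel mean kept in one buffer, so frames can be discarded on arrival."""

    def __init__(self, n_pixels):
        self.count = 0
        self.mean = [0.0] * n_pixels

    def add(self, frame):
        """Fold one frame into the mean using the incremental update formula."""
        self.count += 1
        c = self.count
        # new_mean = old_mean + (sample - old_mean) / count
        self.mean = [m + (px - m) / c for m, px in zip(self.mean, frame)]

acc = RunningMean(n_pixels=2)
for frame in ([0.0, 2.0], [2.0, 2.0], [4.0, 2.0]):
    acc.add(frame)  # the frame is not stored after this call
```

Memory use stays at one frame-sized buffer regardless of burst length. The trade-off is that outlier rejection methods needing every frame at once, such as a per-pixel median, no longer apply directly.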

via asobinet